Intel's Instruction Parallelism is 3.67× Better Than AMD's. It Still Lost.

What CPU Cache Really Reveals About Intel vs AMD Performance (Part 3)

Parts 1 and 2 covered the foundation and the core tests. Part 1 showed that memory access dominates performance and measured raw latency. Part 2 revealed cache boundaries (AMD’s 2.4× cliff), Intel’s 3.67× instruction-level parallelism (ILP) advantage, a failed associativity test, and a devastating false sharing penalty: 13× on Intel vs 6× on AMD.

The key findings so far:

Test 1: Memory access patterns matter more than arithmetic (300 cycles for a memory access vs 1 cycle for an arithmetic operation)

Test 2: Pure latency is similar (Intel 1.49, AMD 1.57 cycles/element)

Test 3: AMD has steeper cache cliffs but better L3 performance

Test 4: Intel’s instruction parallelism is 80% better than AMD’s

Test 5: Failed to measure associativity: the prefetchers are too smart

Test 6: False sharing penalty: Intel 13.25× vs AMD 6.40× (the most critical difference)

Now for the final test, the verdict, and what all this means for your code.

Test 7: Hardware is Weird

The final test demonstrated something more philosophical: hardware is too complex to predict.

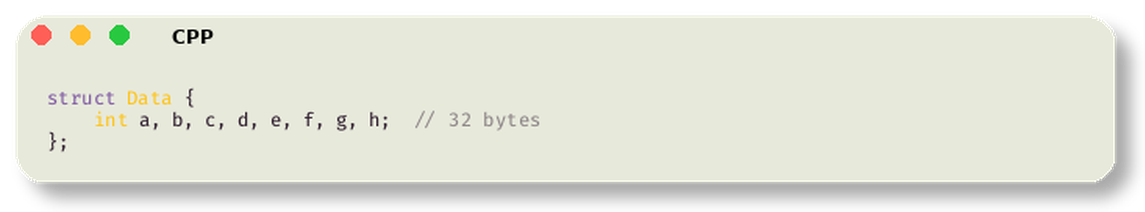

I accessed the fields of a struct in different orders. Every pattern performs the same number of operations; only the access order changes.

Assembly Verification: Identical Structure, Different Offsets

Do these patterns compile differently? Let’s examine the assembly: